Whistleblowers: raising the alarm on safety issues

Overview

A whistleblower is a person who “blows the whistle” to alert the public to a situation that they believe is dangerous or morally wrong and which is not being handled correctly by the people in charge. In general usage, whistleblowing means making a disclosure in the public interest. In the context of safety, the term has the narrower meaning of reporting conditions that may constitute a threat to people or the environment, such as the presence of a risk which has not been properly managed.

In more detail, a typical whistleblower situation has the following characteristics:

the whistleblower has privileged access to information, for example being the employee of a company or an administration

they learn of a situation that they believe is dangerous or morally wrong

they warn their supervisor or other colleagues of the situation

their warning is ignored, for example because they are not believed (they may be seen as a “troublemaker”), or their interpretation of the situation is disputed (for example “that’s not really dangerous, and changing it would be very expensive”), or their warning is politically uncomfortable for people with the ability to change the situation (for example, they may be warning about the consequences of a decision made by the supervisor)

after more than one warning raised through normal internal channels (talking with their supervisor, issuing a safety report, etc.), the whistleblower decides to use some other channel to raise attention to their concerns, such as an inspectorate, the media or the legal system.

Whistleblowers play an important role in exposing hazardous situations or immoral activities. They often pay a personal price for their effort in favour of the collective good, because other individuals who were aware of the situation but did not report it themselves may feel that they have been betrayed by the whistleblower (“you don’t snitch/tell on your colleagues”), leading to social stigma and other forms of retaliation.

A few safety-related examples

Herald of Free Enterprise disaster. In 1987, a car ferry named the Herald of Free Enterprise capsized just outside the Belgian port of Zeebrugge, leading to the death of 193 passengers and crew. The ship had left port with its bow doors open. The investigation into the accident found that employees had raised concerns on five previous occasions about the ship sailing with its doors open. A member of staff had even suggested installing an indicator light on the bridge to show whether the doors were closed, but the suggestion had been rejected as too costly. The inquiry concluded: “If this sensible suggestion […] had received the serious consideration it deserved this disaster might well have been prevented”.

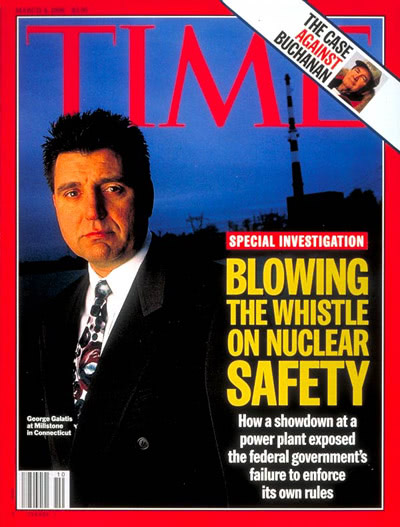

Millstone Nuclear Power Station, USA. In 1996, George Galatis, a nuclear engineer employed by Northeast Utilities, made the cover of Time magazine after having “blown the whistle” on inappropriate routine use of emergency refuelling operations at the nuclear power plant where he worked. During refuelling operations, which occur every 18 months, the power plant was replacing all its nuclear fuel instead of only a third of the fuel, and was allowing a much shorter cool-down period before moving the fuel to a spent-fuel pool than required by regulations. These shortcuts reduced the duration during which the nuclear power station was unavailable (refuelling periods were two weeks shorter than they should have been), improving profitability for the operating company, but greatly increased the risk of the fuel overheating the pool, melting down and causing large radioactive releases.

One worker later informed Galatis that during fuel change procedures, which were sometimes organized as a form of race between crews, the reactor on which they were working had so much residual heat that his plastic-soled boots melted.

After discussing the issue with a colleague, who confirmed that the procedure used was non-standard but that the operating company would not welcome scrutiny on the issue, George Galatis reported the situation to his managers in 1992. Management tried to cover up the issue and refused to report it to the US NRC, the safety regulator for the nuclear sector. Galatis decided to report the situation himself to the local representative of the US NRC (along with other safety issues he had discovered in the meantime, including inadequate resistance to earthquake risks), and discovered that the regulator was aware of the issue but had decided not to intervene. Galatis continued to push the issue with the NRC, which in 1996 put the Millstone plant on its watch list after finding many safety issues at the plant. In 1997, the NRC fined the operating company for multiple violations.

Northeast Utilities had a reputation for cutting corners and firing employees who raised safety concerns. An internal report at the plant where Galatis worked found that failure to follow procedure was common and that management was willfully non-compliant. A study by the NRC found that the number of safety and harassment allegations filed by workers at the company was three times the industry average. Two dozen employees claimed that they were fired because they raised safety concerns.

George Galatis resigned from his position in 1996 after agreeing to a settlement with his employer. He stated that he had been harassed by his employer, passed over for interesting work, referred to human resources, and avoided by his coworkers after he called attention to the safety failings at the plant. He spent the rest of his career outside the nuclear industry. The NRC later introduced a regulation to protect whistleblowers from retaliation by their employer, so that people would be less afraid of reporting safety concerns.

Ladbroke Grove railway catastrophe. A head-on collision between two passenger trains at Ladbroke Grove in London in 1999 killed 31 people and injured more than 400. One of the trains passed through a red “stop” signal, which was preceded by a yellow “prepare for red” signal. The red signal was known to be dangerous due to its poor visibility, having been passed at red eight times in the previous six years; the inquiry into the accident found that the train driver, who was inexperienced, most likely had not seen or had misinterpreted the signal.

The area around Paddington where the collision occurred sees significant amounts of high-speed and bidirectional rail traffic, and the signals are some of the most complicated in the UK. The signals were further obscured by a bridge and by recently installed overhead electrical systems [Cullen 2001]. During the Cullen inquiry, a former operations manager with British Rail stated that “In over 45 years in the industry, I have never seen such a confusing set of options to a driver”.

Factors that contributed to the accident include inadequate training for one of the train drivers, poor visibility of the signal compounded by blinding light from the sun at the time of the accident, and inadequate response from the railway control center. The accident could also have been prevented by the system-wide installation of an automatic train protection system. A cost-benefit analysis had concluded that the safety benefits of such a system did not justify its high cost (the cost per statistical death averted was estimated at 4 M€, compared with the 1.4 M€ threshold used at the time). A post-accident analysis confirmed the numbers used in the study. However, a less expensive system called Train Protection & Warning System was implemented in the UK from 2000 onward.

The signal passed in the accident was known to be dangerous, and the rate of signals passed at danger (SPAD) in the area was known to be “exceptionally high” (according to a 1993 report by the railway infrastructure company), but no work had been planned by the railway infrastructure company to improve its visibility. A report indicated that the signal was located on a curve, was partially obscured and was only intermittently visible to a driver. A report by the HSE (the UK Health and Safety Executive, at the time the safety authority for railways) indicated that the signal was partially obscured by overhead power lines, that a nearby bridge could produce dazzle, and that the signal was “susceptible to swamping from bright sunlight”. An expert review of the visibility of signals had not been undertaken by the infrastructure company for several years in the area, despite several requests for such a risk assessment.

The operations and safety director of one railway operating company, Ms Foster, wrote several letters to the railway infrastructure company concerning signaling in the Paddington area and the specific signal involved in the collision. One letter in 1998 stated “I should be grateful if you would advise me, as a matter of urgency, what action you intend to take to mitigate against this high risk signal”. A subsequent letter in 1999 (which received no reply) stated “This is clearly not the manner in which to manage risk and an approach to which I am strongly opposed. Therefore, I suggest that an holistic approach is taken to SPAD management in the Paddington area and all changes to infrastructure or methods of working are properly risk assessed.” The inquiry into the accident found that the Paddington area of the railway system was characterized by an “endemic culture of complacency and inaction” [Cullen 2001, 137].

The British railway system had seen large organizational changes in the preceding years, following the privatization of British Rail and its separation into more than 100 separate companies. The resulting inter-organizational complexity seems to have contributed to the accident. The infrastructure company, responsible for the design and visibility of signals, had an incentive to treat signals passed at danger as a driver error issue, rather than digging into contributing factors such as signal design. During the inquiry, the infrastructure company defended the design of the signal, indicating that though the approach was complex, its location should be known to all train drivers. The railway infrastructure company did not respond to urgent and repeated requests from a high-ranking representative of an operating company to improve the safety of a dangerous signal. The accident, which occurred in close succession with two other railway accidents, led to major changes in the formal responsibilities for management and regulation of safety of UK rail transport.

Legal protection

In an increasing number of countries, whistleblowers benefit from legal protection against retaliation by their employer:

An early and influential piece of legislation on the topic is the UK Public Interest Disclosure Act of 1998, which was passed in response to the Herald of Free Enterprise catastrophe described above and the Clapham Junction railway accident in 1988. It protects employees who make certain kinds of disclosures (including those concerning health and safety issues in the workplace) from being disciplined, fired or passed over for promotions. Protected disclosures can be made to “prescribed persons”, which include the UK Health and Safety Executive and the UK Environment Agency. The UK government maintains guidance and a code of practice for employers concerning whistleblowing.

In the USA, the Whistleblower Protection Act was adopted in 1989, and includes in its scope reports on “substantial and specific danger to public health and safety”. The Occupational Safety and Health Administration (OSHA) runs a whistleblower program to protect employees from retaliation by their employers after reporting a safety issue.

An EU directive on the protection of whistleblowers was approved by the European Parliament in April 2019 and formally adopted in October 2019 as Directive (EU) 2019/1937. Member states are required to transpose it into their national law.

Embedding the value of dissent in the organizational culture

Given the importance of whistleblowing for safety (as well as for issues such as corporate corruption and other ethical violations), companies should implement policies that protect whistleblowers, and provide them with internal channels to raise concerns. Relevant sources of information on this topic:

PAS 1998:2008 Whistleblowing arrangements code of practice, British Standards Institution, 2008

Evidence collected by research projects such as Whistling while they work can help inform policy development

However, whistleblowing is an extreme behaviour, and companies should seek to foster an organizational culture which encourages dissenting views and avoids “organizational silence”, a concept developed by management theorists in the early 2000s [Morrison and Milliken 2000; Milliken, Morrison, and Hewlin 2003; Pinder and Harlos 2001]:

the withholding of any form of genuine expression about the individual’s behavioral, cognitive and/or affective evaluations of his or her organizational circumstances to persons who are perceived to be capable of effecting change or redress.

Indeed, in complex systems, the boundaries between safe and unsafe operations are imprecise and fluctuate over time. Organizations are exposed to competing forces that lead to practical drift, and people’s attitudes and beliefs change over time. Therefore, sources of danger, safety models and organizational safety barriers should be regularly debated and challenged. The presence of conflicting views on safety should be seen as a source of insights and learning opportunities, rather than a problem to be stamped out [Antonsen 2009]. Organizations need to maintain requisite imagination, the “fine art of imagining what might go wrong” [Westrum 1993; Adamski and Westrum 2003].

Alfred P. Sloan, the former chairman of General Motors, is reported to have told a meeting of the company’s senior executives:

Gentlemen, I take it we are all in complete agreement on the decision… Then I propose we postpone further discussion of this matter until our next meeting to give ourselves time to develop disagreement and perhaps gain some understanding of what the decision is all about.

Similarly, a common practice in law firms recruiting students directly from law school is to assign them the task of writing a legal argument for an opponent of the firm’s client (the adverse lawyer, or prosecutor). Only once you have understood the best argument your opponent can make are you able to defend your client to the best of your ability.

A related concept at the level of small workgroups is psychological safety, a shared belief within a workgroup that people are able to speak up without being ridiculed or sanctioned.

Finally, the accounting/auditing profession has developed the concept of a “risk glass ceiling”, which can prevent internal safety managers and audit teams from reporting on risks that originate at higher levels of their organization (for example, board-level decisions to reduce maintenance expenditure). This can lead to “board risk blindness”, as seen at BP Texas City (USA, 2005).

References

Adamski, Anthony, and Ron Westrum. 2003. Requisite imagination: The fine art of anticipating what might go wrong. In Handbook of Cognitive Task Design, edited by Erik Hollnagel, 193–220. CRC Press.

Antonsen, Stian. 2009. Safety culture and the issue of power. Safety Science 47(2):183–191.

Cullen, Lord William. 2001. The Ladbroke Grove rail inquiry: Part 1 report. HSE Books, http://www.railwaysarchive.co.uk/documents/HSE_Lad_Cullen001.pdf.

Milliken, Frances J., Elizabeth W. Morrison, and Patricia F. Hewlin. 2003. An exploratory study of employee silence: Issues that employees don’t communicate upward and why. Journal of Management Studies 40(6):1453–1476.

Morrison, Elizabeth W., and Frances J. Milliken. 2000. Organizational silence: A barrier to change and development in a pluralistic world. Academy of Management Review 25(4):706–725.

Pinder, Craig C., and Karen P. Harlos. 2001. Employee silence: Quiescence and acquiescence as responses to perceived injustice. In Research in Personnel and Human Resources Management, edited by Gerald Ferris, 331–369. Emerald Group Publishing Limited.

Westrum, Ron. 1993. Cultures with requisite imagination. In Verification and Validation of Complex Systems: Human Factors Issues, edited by J. A. Wise, V. D. Hopkin, and P. Stager. Springer.
