The defence in depth principle

A layered approach to safety barriers

Overview

Defence in depth is a safety philosophy involving the use of successive compensatory measures (often called barriers, or layers of protection, or lines of defence) to prevent accidents or reduce the damage if a malfunction or accident occurs on a hazardous facility. Barriers should, as far as possible, be independent, meaning that the failure of one barrier does not affect the effectiveness of other barriers. The philosophy ensures that safety is not wholly dependent on any single element of the design, construction, maintenance or operation of the facility.

The concept is ancient, dating back to the design of military forts, which used multiple layers of defence including moats, outer walls, inner walls and towers. It is also related to the old idiom “Don’t put all your eggs in the same basket”, attributed to a wise man in the novel Don Quixote.

Applied to safety, it has been most strongly codified in the nuclear power sector, where it is described in the INSAG 10 reference document [INSAG 1996]. The philosophy is applied in many other industrial sectors. It is also used in the security and cybersecurity communities. For cybersecurity, see in particular IEC 62443-1-1, Industrial communication networks – network and system security, chapter 5.4.

The objectives are as follows:

- compensate for potential failures of system components or mistakes made by humans working within the system;

- maintain the effectiveness of the barriers by averting damage to the plant and to the barriers themselves;

- protect the public and the environment from harm if the barriers are not fully effective.

The initial, basic interpretation of the defence in depth principle considered the independent physical layers surrounding the hazard source (concerning a nuclear reactor for example, a first level is the cladding that encases the fuel, a second level is the reactor vessel, and a third level is provided by the containment building). Progressively, safety specialists began to adopt a more conceptual interpretation of layers, including the influence of non-physical layers of defence such as emergency response and human and organizational factors of safety.

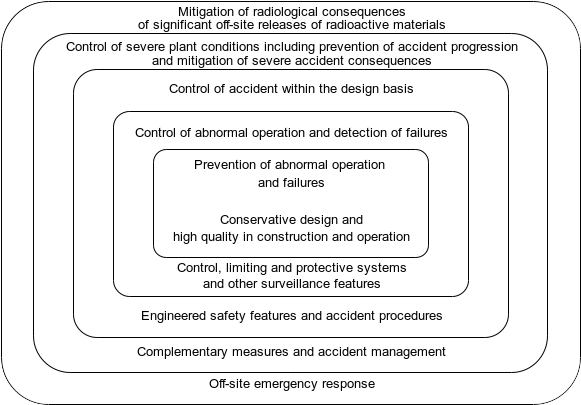

The IAEA formulation of the defence in depth principle specifies five levels of defence:

- Prevent deviations from normal operation

- Detect and control deviations

- Incorporate safety features, safety systems and procedures to prevent core damage

- Mitigate the consequences of accidents

- Mitigate radiological consequences

It is important for the implementation of defence in depth to be periodically verified and tested, to ensure that changes made to the system have not weakened its effectiveness [IAEA 2005].

The defence in depth principle can be used both during the design of a new system, and during a safety assessment of an existing system. During design reviews, analysts will use criteria such as the single failure criterion [IAEA 1990], which requires that a system designed to perform a defined safety function must be capable of meeting its objectives assuming the failure of any major component within the system or an associated system which supports its operation. The objective of this type of analysis is to identify potential design weaknesses which could be resolved by an increased amount of redundancy or the use of alternative mechanisms (diversity to protect against common-cause failures).
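The single failure check described above can be sketched in a few lines of Python. This is a minimal illustration, not a method taken from [IAEA 1990]: it assumes a hypothetical model in which a safety function is delivered by redundant "trains", each train working only if all of its components work. The component names (`pump_A`, `bus_1`, and so on) are invented for the example.

```python
from itertools import chain


def function_available(trains, failed):
    """Return True if at least one train contains no failed component."""
    return any(not (set(train) & failed) for train in trains)


def single_failure_weaknesses(trains):
    """Return the components whose failure alone defeats the safety function."""
    components = sorted(set(chain.from_iterable(trains)))
    return [c for c in components if not function_available(trains, {c})]


# Hypothetical design: two cooling trains that share one power bus.
shared_bus = [
    ["pump_A", "valve_A", "bus_1"],
    ["pump_B", "valve_B", "bus_1"],
]

# Same design after giving the second train its own power bus.
separate_buses = [
    ["pump_A", "valve_A", "bus_1"],
    ["pump_B", "valve_B", "bus_2"],
]

print(single_failure_weaknesses(shared_bus))      # ['bus_1']
print(single_failure_weaknesses(separate_buses))  # []
```

In this toy model the shared power bus is the only single failure that defeats the function, and adding a second bus removes it. A real analysis would also have to consider supporting systems and common-cause failures, which simple redundancy does not address.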

The single failure criterion is sometimes understood to include errors made by the human operators of the system. Requiring systems to tolerate incorrect or omitted safety-related actions of the operator is often quite difficult.

Note: “An operator error is a single incorrect or omitted action by a human operator attempting to perform a safety-related manipulation”, according to a US standard ANSI/ANS-58-9-1981 “Single Failure Criteria for Light Water Reactor Safety-Related Fluid Systems”.

The defence in depth principle underlies many widely used risk analysis methods, such as the Layer of Protection Analysis (LOPA) method [CCPS 2001]. It is also used by regulators (in particular in the nuclear power industry) in their assessment of the adequacy of safety controls implemented by a plant operator.
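The arithmetic at the heart of a LOPA study can be sketched as follows. In its simplest form, the frequency of the unwanted consequence is estimated as the initiating event frequency multiplied by the probability of failure on demand (PFD) of each protection layer, under the assumption that the layers are independent. The layer names and all numerical values below are illustrative assumptions, not figures from [CCPS 2001].

```python
import math


def mitigated_frequency(initiating_frequency, pfds):
    """Consequence frequency per year, assuming fully independent layers."""
    return initiating_frequency * math.prod(pfds)


# Illustrative values only, not taken from any real study.
initiating_frequency = 0.1  # initiating events per year
layer_pfds = {
    "operator response to alarm": 0.1,
    "safety instrumented function": 0.01,
    "relief valve": 0.01,
}
tolerable_frequency = 1e-5  # per year, set by the organisation's risk criteria

frequency = mitigated_frequency(initiating_frequency, layer_pfds.values())
print(f"mitigated frequency: {frequency:.1e} per year")
if frequency <= tolerable_frequency:
    print("target met")
else:
    print("add or improve protection layers")
```

The multiplication is only valid if the layers really are independent of each other and of the initiating event, which is why the method only gives credit to layers that meet that condition.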

Critiques of the principle

One possible negative effect of this design principle was identified by Jens Rasmussen, who pointed out that unless the effectiveness of barriers is regularly monitored, people working within the system will develop compensatory measures (they will adapt their behaviour) that reduce the level of safety intended by system designers.

One basic problem is that in such a system having functionally redundant protective defenses, a local violation of one of the defenses has no immediate, visible effect and then may not be observed in action. In this situation, the boundary of safe behaviour of one particular actor depends on the possible violation of defenses by other actors. [Rasmussen 1997]

Rasmussen called this idea the “defence in depth fallacy” [Rasmussen 1990]:

In systems designed according to [the defence in depth design principle], an accident is dependent on simultaneous violation of several lines of defence: an operational disturbance (technical fault or operator error) must coincide with a latent faulty maintenance condition in protective systems, with inadequacies in protective barriers, with inadequate control of the location of people close to the installation etc. The activities threatening the various conditions normally belong to different branches of the organisation. The presence of potential of a catastrophic combination of effects of local adaptation to performance criteria can only be detected at a level in the organisation with the proper overview. However, at this level of the control hierarchy (organisation), the required understanding of conditionally dangerous relations cannot be maintained through longer periods because the required functional and technical knowledge is foreign to the normal management tasks at this level.

Defence in depth limits access to information that is essential for adaptation. The notion of practical drift is important in understanding how the safety of systems designed using the defence-in-depth principle can erode over time.

Another criticism is that by increasing the number of barriers, the total complexity of the system increases, which in itself increases the level of risk. This is particularly true if the barriers are not fully independent. It’s important to note that full independence is hard to achieve, since barriers often depend on some common underlying infrastructure, such as the electricity supply, water supply, cooling system, access path for the control wiring, ventilation, or more subtle features such as a clock signal in the case of electronic equipment. Their independence can be weakened by the adaptive behaviours of humans in the system, as noted above by Rasmussen. Furthermore, they are typically managed and maintained by the same people. These common dependencies can lead to a common mode failure, which defeats multiple layers of defence simultaneously.

An example of a shared access path for control wiring: a fire in insulation at the Browns Ferry nuclear power plant (Alabama, USA) in 1975 was started by an engineer who was using a candle to check that a temporary seal (made from very flammable material) on cables was airtight. The fire spread via the cables to a room on the other side of the wall, and its extent was not identified until major damage had occurred on two units of the plant. Several safety systems, supposed to be independent, were disabled because their control cables all followed the same cable rack. Since this incident, great care has been taken during design to ensure that control and power wiring for independent safety systems do not share common cable racks.

The classical illustration of dependence caused by human adaptation is a process whose operation is monitored both by a human operator and by an automated alarm mechanism. The human monitoring and alarm mechanism might have been assumed to be independent safety barriers by the system designers, meaning that their simultaneous failure is very unlikely (in mathematical terms, its probability is the product of the failure probabilities of the two barriers). In real operation, the human operator will know that the alarm system is present, and will adjust their behaviour to rely partially on the alarm, leading to a lower level of vigilance than if the alarm were not present. Hence, the level of safety resulting from the two barriers is not as high as if they were truly independent.
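The effect of a common dependency on the "product of probabilities" argument can be illustrated with a short sketch. It assumes a deliberately simple model that is not taken from the cited references: two barriers fail independently with probabilities `p1` and `p2`, and both also fail whenever a shared support system fails, with probability `q`. The barrier and support names are hypothetical.

```python
def p_both_fail_independent(p1, p2):
    """Probability that two fully independent barriers fail together."""
    return p1 * p2


def p_both_fail_shared_support(p1, p2, q):
    """Same, when a shared support system (failure probability q) disables both."""
    return q + (1 - q) * p1 * p2


def shared_dependencies(barriers):
    """Map each support system to its users, keeping those used by several barriers."""
    users = {}
    for barrier, supports in barriers.items():
        for support in supports:
            users.setdefault(support, []).append(barrier)
    return {s: b for s, b in users.items() if len(b) > 1}


# Illustrative numbers: each barrier fails on 1% of demands, the shared
# support system on 0.1% of demands.
independent = p_both_fail_independent(0.01, 0.01)
dependent = p_both_fail_shared_support(0.01, 0.01, 0.001)
print(f"independent: {independent:.2e}, shared support: {dependent:.2e}")

# Hypothetical inventory of what each barrier relies on.
barriers = {
    "emergency cooling": ["bus_A", "cable_rack_3"],
    "containment spray": ["bus_B", "cable_rack_3"],
}
print(shared_dependencies(barriers))
```

With these numbers the joint failure probability is roughly ten times higher than the independent estimate, even though the shared support system is itself ten times more reliable than either barrier. Listing shared dependencies, as in the second part of the sketch, is one simple way to look for candidates for common mode failure.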

Related accidents

The Fukushima Daiichi nuclear accident illustrates a poor implementation of the defence in depth concept [NEA 2016]. The earthquake and subsequent tsunami caused all six power lines connecting the facility to the electric grid to fail, and also destroyed all but one of the on-site emergency power supplies.

The accident at unit 2 of the Three Mile Island nuclear power plant in 1979 (Pennsylvania, USA), which led to a fuel meltdown and small radioactive releases outside the plant, had a strong impact on nuclear safety provisions worldwide, as well as on the public perception of risks related to nuclear power generation. The accident, which developed slowly over several days, was triggered by a relief valve in the cooling system which was stuck open, but whose failure was not understood by the operators of the reactor. The accident sequence is quite complex, with a combination of issues related to plant design, performance of control systems, human-machine interfaces, training of operators, and information sharing based on operational experience feedback. The accident illustrates both the broad effectiveness of the defence in depth principle (despite a major failure of several safety systems, there was almost no leakage of radiation to the outside environment) and the relevance of its underlying assumption that there are limits to the effectiveness of each layer (or level) of protection designed into a complex system. Indeed, an NRC NUREG report on the accident noted that “unanticipated interactions and interrelationships among and between systems and the operators and the possibility of undetected common modes of failure are a bound on the assurance of any level of prevention” [NUREG 1979].

Conclusion

Defence in depth is widely regarded as a useful and important principle for designing safe systems and for ensuring that safeguards put in place during the design process are not weakened by later modifications to the system. It is not the only design principle that can provide guidance when thinking about the safety of a system: a useful complement is the systemic approach to safety, which aims to analyze the system as an integrated whole, including the interactions between technology, humans and organizational features.

Photo credits: Bodiam castle by Matthew Millen, CC BY-NC-ND licence.

References

CCPS. 2001. Layer of protection analysis: Simplified process risk assessment. Wiley-Blackwell, ISBN: 978-0816908110.

IAEA. 1990. Application of the single failure criterion. IAEA safety series n°50-P-1. Vienna. IAEA, ISBN: 92-0-123790-1.

IAEA. 2005. Assessment of defence in depth for nuclear power plants. Safety Reports Series 46. IAEA, ISBN: 92-0-114004-5. https://www.iaea.org/publications/7099/assessment-of-defence-in-depth-for-nuclear-power-plants.

INSAG. 1996. Defence in depth in nuclear safety: INSAG 10. Vienna. International Nuclear Safety Group, IAEA, ISBN: 9201025963. http://www-pub.iaea.org/MTCD/publications/PDF/Pub1013e_web.pdf.

NEA. 2016. Implementation of defence in depth at nuclear power plants: Lessons learnt from the Fukushima Daiichi accident. Regulatory guidance report 7248. OECD Nuclear Energy Agency. DOI: 10.1787/9789264253001-en.

NUREG. 1979. TMI-2 lessons learned task force final report. NUREG-0585. US Nuclear Regulatory Commission. https://www.nrc.gov/docs/ML0614/ML061430367.pdf.

Rasmussen, Jens. 1990. Human error and the problem of causality in analysis of accidents. Philosophical Transactions of the Royal Society of London. B, Biological Sciences 327(1241):449–462. DOI: 10.1098/rstb.1990.0088.

Rasmussen, Jens. 1997. Risk management in a dynamic society: A modelling problem. Safety Science 27(2):183–213. DOI: 10.1016/S0925-7535(97)00052-0.
