Safety culture

A contentious and confused notion

Overview

The concept of “safety culture” is notoriously nebulous, and a wide range of definitions has been proposed by researchers over the past 30 years, ranging from simple ones such as “the way we ensure safe operations around here” and “what people at all levels of an organization do and say when their commitment to safety is not being scrutinised” (the latter definition is due to psychologist James Reason) to more explicit ones:

The safety culture of an organisation is the product of individual and group values, attitudes, perceptions, competencies, and patterns of behaviour that determine the commitment to, and the style and proficiency of, an organisation’s health and safety management. Organisations with a positive safety culture are characterised by communications founded on mutual trust, by shared perceptions of the importance of safety and by confidence in the efficacy of preventive measures.

ACSNI Human Factors Study Group: Third report - Organising for safety, HSE Books, 1993.

There are different interpretations of the concept of safety culture in the literature, perhaps the biggest gap being between authors who see safety culture as something that an organization possesses or does not (P. Hudson being a prominent researcher with this approach) and others, closer to the tradition of research into organizational culture, who see safety culture as the intersection between the organization’s culture (something that all organizations develop over time) and safety management practices.

In this latter interpretation it is important to note that safety culture is not always a positive influence on safety: the organizational culture may constitute a reservoir of tacit knowledge on safe ways of working, act as a soft coordination mechanism, and encourage people to maintain a questioning attitude, challenging beliefs and practices and enhancing imagination about possible accident scenarios, but it may also insulate organizational members from dissenting points of view, penalize deviance from group norms and perpetuate myths that encourage illusion of control [Clarke 1993].

A questioning attitude is one of the components of safety culture that has been identified in the nuclear power sector. One definition from the US NRC is “individuals avoid complacency and continuously challenge existing conditions and activities in order to identify discrepancies that might result in error or inappropriate action”. A questioning attitude helps to prevent “groupthink” by encouraging diversity of thought and intellectual curiosity.

Influential psychologist Irving Janis described a phenomenon he called “groupthink”, which arises when the desire for conformity and cohesiveness in a group leads all members to minimize conflict and critical evaluation of ideas, leading them to dysfunctional decisions [Janis 1972]. Though this is more a group-level phenomenon than one occurring at an organizational level, similar negative effects can be generated by features of a strong organizational culture.

Schein’s model of organizational culture

A commonly used definition of the term culture, due to the psychologist Edgar Schein, who led pioneering research on organizational culture starting in the 1980s, is:

Culture is a pattern of basic assumptions — invented, discovered or developed by a given group as it learns to cope with its problem of external adaptation (how to survive) and internal integration (how to stay together) — which have evolved over time and are handed down from one generation to the next.

Organizational culture can be thought of as consisting of three interrelated levels, shown in the figure below (adapted from [Schein 1985]).

The “deepest” level of an organization’s culture is composed of its basic assumptions and beliefs: what people find important, what they think contributes to performance, what performance means, the stories that members tell to newcomers in the organization. These are intangible, tacit (not written down or verbalized) and taken-for-granted attitudes and beliefs. The second level is that of values, shared principles, rituals, standards of behaviour and goals. It also includes the public statements about the organisation’s values and rules of behaviour (how the members represent the organization to themselves and to others). Finally, the “surface” level is composed of the artefacts, the physical environment, interaction mechanisms, official policies, office jokes, dress code, and other visible features of how members of the organization work together.

The public statements relevant here are not so much the corporate value statements published by many companies, but rather the statements made by frontline workers and the levels of management close to operations. Corporate value statements tend to be somewhat meaningless, and in fact some studies have found a slightly negative correlation between (a) these company value statements and the values declared by top-level executives, and (b) the values perceived and stated by company employees in anonymous surveys. For instance, a company that states “integrity” as one of its corporate values is less likely to be seen by employees as operating with integrity than a company that does not include this word in its corporate value statement [Sull, Turconi, and Sull 2020]. For a darkly humorous take on this, watch the video This Is How Companies REALLY Come Up With ‘Organisational Values’ by the authors of “Wankernomics”.

Note that the artifacts level of organizational culture consists of aspects that are fairly easy to see when you visit the premises of an organization, but to understand the reason for these aspects you need to try to understand the values that lead to these artifacts, and the assumptions that generate these values. The “inner” levels are not directly visible, but can be uncovered by observing how people interact and asking about their reasons for doing things in certain ways and their perceptions of what is appropriate behaviour in different situations.

This model has been criticized as excessively simplistic; some authors suggest that more levels, such as symbols and rhetoric, should be included. There is also an implicit assumption in this model that organizations are monolithic (unitary), which is also an oversimplification.

Organizational culture is something that an organization is, not something that the organization has, implying that it is not an element that can be modified quickly or easily.

On the slow nature of cultural evolution

[Moghaddam and Crystal 1997] analyze the evolution of the values of egalitarianism, hierarchy, autonomy and conservatism in the context of authority relations (particularly the treatment of women) in Iran, China and Japan over several centuries. They find that despite large political and economic changes in these countries over this period, the underlying values and resulting social practices have changed very slowly.

[Schwartz, Bardi, and Bianchi 2000] analyzed whether the basic values held by citizens of Eastern and Central Europe changed over a 10-year period following the collapse of the communist regimes at the end of the 1980s. In the 40-year period preceding the collapse, citizens of these countries were subjected to life-long “political education” in communist ideology. The collapse of communism led to enormous changes in the political and legal systems and in economic institutions and opportunities. However, the researchers found very little change in values such as conservatism, hierarchy, egalitarianism, intellectual autonomy and affective autonomy in the decade following the collapse of communism.

A famous analysis [Putnam 1993] of democratic institutions in Italy and the impact of civic engagement argues that the significant differences between the north and south of the country can be traced back to different political systems around 1000 years earlier, when northern Italy had a relatively horizontal (democratic) system of governance, and southern Italy had a feudal and autocratic system.

Large organizations are generally characterized by the presence of subcultures which differ from the dominant organizational culture. Subcultures within an organization are formed when members with common identities or job functions gather around their own interpretations of the organizational culture. Organizational theorist Edgar Schein identified three subcultures that are present in many organizations: executives, whose primary concern is financial performance; engineers, who resolve problems using technology and specialist knowledge; and operators, who run the systems within the organization. There are often also much narrower subcultures present within complex organizations, depending on age, occupation, seniority, shift and previous occupation [Mearns et al. 1998].

Difference with safety climate

A similar concept that emerged at around the same time as safety culture is safety climate, with important work dating back to [Zohar 1980], who defined it as “shared employee perceptions about the relative importance of safe conduct in their occupational behavior”. Safety climate is often described as consisting of shared perceptions of safety-related issues (management commitment to safety, rule adherence, safety training, procedures, working conditions etc.) whereas safety culture is more associated with safety-related values, assumptions and norms. Organizational climate can be viewed as a bottom-up (flowing from employee perceptions) indicator of the underlying core values and assumptions that form the organization’s culture [Zohar and Hofmann 2012].

In practice however, many people today use the two terms interchangeably.

Dimensions of safety culture

James Reason suggested [Reason 1990] that safety culture is composed of a number of dimensions, illustrated below.

[Turner 1992, 199] listed four dimensions of a “good” safety culture:

- the establishment of a caring organizational response to the consequences of actions and policies;

- a commitment to this response at all levels, especially the most senior, together with an avoidance of over-rigid attitudes to safety;

- provision of feedback from incidents within the system to practitioners;

- the establishment of comprehensive and generally endorsed rules and norms for handling safety problems, supported in a flexible and a non-punitive manner.

Pidgeon and O’Leary argue [Pidgeon and O’Leary 2000] that a “good” safety culture might reflect and be promoted by four factors:

- Senior management commitment to safety;

- Realistic and flexible customs and practices for handling both well-defined and ill-defined hazards;

- Continuous organizational learning through practices such as operational experience feedback;

- Care and concern for hazards shared across the workforce.

These attempts to decompose the concept of safety culture into a small number of dimensions that are each fairly easy to understand (and perhaps even to assess) are attractive, but it should be noted that there is no strong evidence that these dimensions do indeed cover the same characteristics as the more general concept described above. (Some evidence concerning the “informed culture” dimension is provided in [Westrum 2004].)

Safety culture assessment

Safety culture can be “assessed” (characterized along a number of dimensions) using different methods: questionnaires, interviews, focus groups and observations [Eeckelaert et al. 2011]. In practice, quantitative questionnaires are the simplest and cheapest assessment method and the most widely implemented in industry.

Many questionnaires exist on the market. Sample dimensions covered by such questionnaires, taken here from the Nordic Occupational Safety Climate Questionnaire (NOSACQ-50), which is available online in 35 languages, are:

- Management safety priority, commitment, and competence

- Management safety empowerment

- Management safety justice

- Workers’ safety commitment

- Workers’ safety priority and risk non-acceptance

- Safety communication, learning, and trust in co-workers’ safety competence

- Trust in the efficacy of safety systems

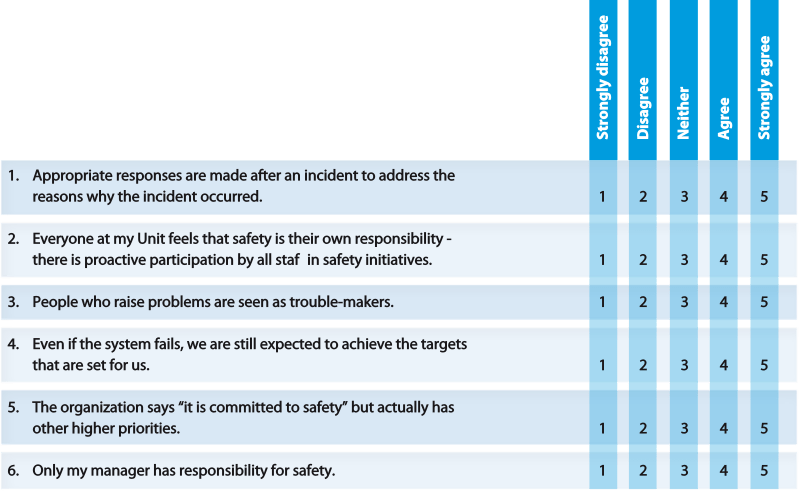

Some example items (typically answered on a 5-point Likert scale) are:

- Management encourages employees here to work in accordance with safety rules, even when the work schedule is tight

- We who work here break safety rules in order to complete work on time
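To make the scoring of such items concrete, here is a minimal sketch in Python, assuming a 5-point scale coded from 1 (strongly disagree) to 5 (strongly agree). Negatively worded items, such as the second example above, are conventionally reverse-coded (6 minus the response on a 5-point scale) before averaging, so that a higher score always indicates a more favourable perception. The item identifiers (`mgmt_encourages`, `break_rules`), the `REVERSED` set and the group labels are hypothetical illustrations, not the scoring key of NOSACQ-50 or of any other published instrument.

```python
from collections import defaultdict
from statistics import mean

SCALE_MAX = 5  # assumed 5-point Likert scale, coded 1..5

# Hypothetical item identifiers. "break_rules" is negatively worded:
# agreeing with it signals a *less* favourable safety climate.
REVERSED = {"break_rules"}


def item_score(item: str, response: int) -> int:
    """Return the response, reverse-coded if the item is negatively worded."""
    if not 1 <= response <= SCALE_MAX:
        raise ValueError(f"response {response} outside 1..{SCALE_MAX}")
    return SCALE_MAX + 1 - response if item in REVERSED else response


def group_means(responses):
    """Average the (reverse-coded) scores per group.

    `responses` is an iterable of (group, item, response) tuples, where
    the group might be a site, a department or a hierarchical level.
    """
    scores = defaultdict(list)
    for group, item, response in responses:
        scores[group].append(item_score(item, response))
    return {group: mean(values) for group, values in scores.items()}


if __name__ == "__main__":
    data = [
        ("operators", "mgmt_encourages", 4),
        ("operators", "break_rules", 4),  # agrees rules are broken: scored 2
        ("managers", "mgmt_encourages", 5),
        ("managers", "break_rules", 1),   # strongly disagrees: scored 5
    ]
    print(group_means(data))
```

Aggregated per group in this way, the scores support the kinds of comparison between sites, departments or hierarchical levels discussed in this section; they do nothing, however, to correct the response biases of the underlying survey.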

The figure below shows some of the questions used by Eurocontrol in the safety culture questionnaires it runs at European air traffic management service providers (source: Eurocontrol/FAA white paper on Safety Culture in Air Traffic Management, 2008).

Questionnaire responses can be presented graphically as shown in the figure below. Such plots enable multiple types of comparison, for instance between sites (locations), departments or hierarchical levels.

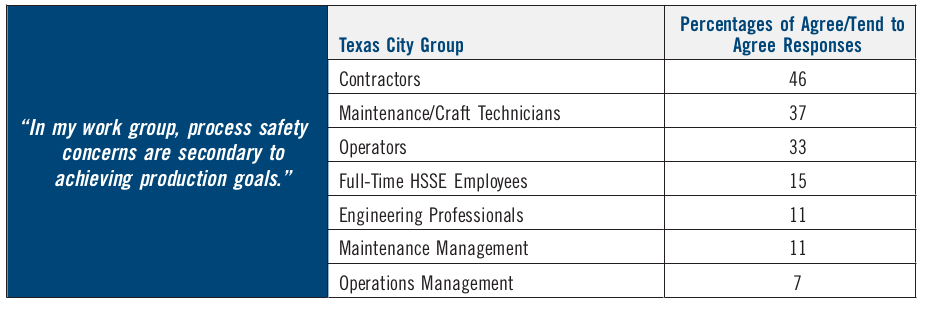

The table below, extracted from the Baker Panel report into the 2005 BP Texas City accident [Baker et al. 2007], illustrates how certain survey responses can be analyzed per occupational category.

The full text of the questionnaire developed by the Baker Panel as a (small) component of their very thorough review of BP’s corporate safety culture, safety management systems, and corporate safety oversight at its U.S. refineries is available in an appendix to the report (p. 355).

This type of output typically pleases managers with an engineering background, who tend to feel that it helps them understand the “state” of their organization’s culture. Unfortunately, unless it is complemented by interviews and observations that give the analyst the opportunity to scratch a little deeper below the surface, it can provide a mistaken impression that the situation is acceptable (see box below on Snorre Alpha).

Survey-based assessment methods are affected by significant biases. People tend to respond to questionnaires according to what they think the socially desirable responses are (what they should think and do), rather than their real perception. Furthermore, safety culture surveys are influenced by employees’ concerns as to how the results will be used (“will our unit be punished if our results are bad?”). They are also dependent on the social climate. They often give only a very superficial view of the perception of safety management [Guldenmund 2007]. These weaknesses become more pronounced when the social climate is poor, because employees become more suspicious of the possible consequences of speaking up and revealing their true perceptions of the organizational culture.

Consider the organizational issues that are most frequently identified by the investigations into major industrial accidents: the most significant issues are high-level decision-making, priorities concerning resource allocation and production/safety tradeoffs, top-level leadership practices, lack of learning, and drift to danger. These are all issues that are unlikely to be uncovered by perception surveys, in particular due to the biases and fears mentioned above.

A misleading view of an organization’s safety culture provided by questionnaires at Snorre Alpha

Snorre Alpha is an offshore oil and gas platform operated by Statoil (a company now called Equinor) in the North Sea. It suffered a subsea blowout (a buildup of gas from the reservoir blew “out of control”) in 2004, which luckily did not ignite, but which could have led to multiple fatalities. The operating company had run a “safety culture” survey shortly before the accident. Safety researcher Stian Antonsen compared [Antonsen 2009] the results of surveys with the conclusions of two post-accident analyses: an accident investigation undertaken by the regulator and a causal analysis undertaken by the operating company. In the survey, employees reported a very positive perception of the safety culture on the platform. In contrast, the two post-accident analyses provided a radically different view of the situation on the platform, indicating that production pressure often outweighed safety considerations. The reassuring image provided by the safety culture survey had blinded HSE managers to important organizational problems on the platform.

Criticism of the concept

The concept of safety culture, and the way in which it has been adopted by safety professionals in industry, has been the subject of significant criticism:

As the concept has become increasingly popular over the past two decades, both in academia and in industry, it has become increasingly imprecise and conceptually fuzzy [Zhang et al. 2002]. A literature review undertaken by Frank Guldenmund published in the Safety Science journal [Guldenmund 2000] criticized the lack of conceptual coherence of different journal articles referring to safety culture. In a later article [Guldenmund 2010] he writes:

As applied by safety researchers, the culture concept is deprived of much of its depth and subtlety, and is morphed into a grab bag of behavioural and other visible characteristics, without reference to the meaning these characteristics might actually have, and often infused with normative overtones.

Guldenmund suggested that a more relevant and clearly defined concept is organizational culture, and that analysis could be focused on the factors that influence an organization’s culture.

The term “safety culture” has become a vague and globalizing concept used (in particular in high-hazard industries) to talk about all human and organizational factors of safety. It doesn’t help people to ask the difficult questions on the contingent and contextual factors that affect safety. This argument is present in the articles [Hale 2000; Guldenmund 2000; Pidgeon 1998]. Sue Cox and Rhona Flin write that [Cox and Flin 1998]:

Sometimes culture is just a lazy catch all term for a mishmash of practices that are somehow meant to combine to produce a coherent approach to safety… calls for a change in culture are little more than feel good messages.

[Reiman and Rollenhagen 2014] write:

To blame an organisation for having a weak safety culture has become almost the equivalent easy response to system problems as was blaming individuals for human errors a few decades ago.

[Grote 2012, 1990] notes that:

When used as part of post hoc explanations for accidents and incidents, safety culture tends to obscure the picture because by focusing attention on very broad assessments of norms and values it distracts from manifest organizational and management problems.

The concept of culture has fairly low descriptive power. The critical article Taming Prometheus: talk about safety and culture [Silbey 2009] describes culture as the “detritus of social transactions”, the negligible residual elements that remain once you have accounted for the impacts of features such as organizational power, hierarchy, inequality, expertise, solidarity between workers, and the role of oversight. Culture names “what is left over after you forgot what it was you were originally trying to learn” [O’Reilly and Chatman 1996]. Charles Perrow, author of the famous book Normal Accidents [Perrow 1984], wrote:

I have not written anything explicitly on the culture because I doubt its utility. It wasn’t ‘shared values and beliefs’ that overruled safety engineers at Ford and Firestone about dangerous tires; it was top management’s concern for profits that hid the data from US government and lied about it […] Of course there are ‘cultures’ (note the plural) in companies, but on issues of risk and safety I think the issue is really power.

and, in a foreword to the second edition of the book [Perrow 1999], commenting on the recent profusion of interest in culture in the social sciences, Perrow wrote:

we miss a great deal when we substitute power with culture.

Thinking in terms of safety culture implies that one believes in the existence of a homogeneous set of values, beliefs and behaviours concerning safety at the level of a large organization. In practice, there are often significant differences between sites of a company, even those located in the same geographic area, and there are generally significant differences between professional groups within a company (executives, engineers/designers, front-line operating staff, maintenance workers, etc.).

Subcultures

The cultures of these subgroups are called subcultures in the research literature. Subcultures can develop based on different features distinguishing subgroups:

- Within specific professional/occupational groups, due to differences in their social and educational background as well as different activities within the organization. For example, the pilots of an airline will tend to have quite different beliefs and attitudes from the baggage handlers. For an interesting case study of how different professional groups (engineers and site managers) in a construction firm can hold very different beliefs and attitudes regarding the prevention of accidents, see [Gherardi, Nicolini, and Odella 1998].

- The geographical location where they work (national and regional differences in culture), and sometimes the site or unit (two factories run by the same company located in the same region may have quite different cultures if, for instance, they used to belong to two different firms).

- The level of experience/tenure within the company.

Influential research by social psychologist Geert Hofstede studied the impact of national culture on values in the workplace, based on the analysis of a large database of employee value scores collected within IBM between 1967 and 1973, covering 50 countries. He used factor analysis to identify a number of underlying dimensions along which cultural values can be analyzed: individualism vs collectivism, power distance, uncertainty avoidance, masculinity vs femininity, indulgence vs restraint, and long- vs short-term orientation (the latter two dimensions having been added in later studies) [Hofstede 2001]. Hofstede’s work found large differences between countries along these different dimensions. Some research has attempted to determine whether these differences lead to variability in the level of risk-taking behaviours and in safety performance at a national level, and has found that other factors such as perceived management commitment to safety and the efficacy of safety measures have a greater impact [Mearns and Yule 2009].

There is a strongly instrumental approach to the concept of culture (“improve the culture”, “modify the culture”, and even bizarre expressions such as “implement a safety culture” [Hudson 2007]) which is very present in the work of many human and organizational factors (HOF) consultants and which seems to be widely adopted by safety managers in high-hazard industries. This approach is strongly criticized by many scholars, such as [Martin 1985, 95]:

Culture cannot be managed; it emerges. Leaders don’t create cultures; members of the culture do. Culture is an expression of people’s deepest needs, a means of endowing their experiences with meaning. Even if culture in this sense could be managed, it shouldn’t be […] it is naive and perhaps unethical to speak of managing culture.

and also in [Reynolds 1994]:

Culture is not an ideological gimmick, to be imposed from above by management-consulting firms, but a stubborn fact of human social organization that can scuttle the best of Corporate plans if not first taken into account.

Thinking in terms of “improving” safety culture implies that one believes in the relevance of top-down actions that aim to modify the set of values, beliefs and behaviours concerning safety within a large organization. Adopting this perspective, which celebrates safety as resulting from proclamations made by top managers in a luxurious office, implies that one will spend little time examining conflicts, differences in viewpoint and tradeoffs with other priorities [Henriqson et al. 2014], which are more important in the creation of safety in real complex systems (but much more messy and challenging for managers to address…).

The safety culture concept can lead to a shift in responsibility for appropriate safety behaviours and correct implementation of safety management systems and tools towards front-line personnel (which can be cynically referred to as “empowering front-line workers”). As pointed out by [Hopkins 2005], safety culture is quite often interpreted in industry as concerning individual attitudes with respect to safety, instead of aspects relating to a collective group, and it is often used to defend the implementation of “behaviour-based safety” programmes.

When deployed in a bureaucratic manner, as is common in large companies, it can become a tool of control, or of governmentality in Foucault’s sense [Henriqson et al. 2014].

As the phenomena continually recede before efforts to control them, research advocating safety culture seems, in the end, to suggest that responsibility for the consequences of complex technologies resides in a cultural ether, everywhere or nowhere. [Silbey 2009]

There is a risk that, with a focus on the cultural dimensions of safety (which in fact are often interpreted by managers within industry as the behavioural dimensions of safety), less attention is paid to more effective levers for safety improvement, such as design work on inherent safety and the implementation of technological improvements [Rollenhagen 2010].

Related accidents

The Chornobyl nuclear disaster highlighted the importance of managerial and human factors for safety performance. The term “safety culture” was first used in the INSAG 1 report [INSAG 1986] released by the IAEA in the aftermath of the Chornobyl disaster, to describe the complacency towards nuclear hazards and the willingness to override safety control systems and deviate from the planned test procedure illustrated by that accident.

The first INSAG report was based on information provided by Soviet engineers and nuclear industry managers during a post-accident review meeting held in 1986. The Soviet representatives focused heavily on the idea that plant operators had violated several procedures during the safety test that led to the disaster. Later investigation determined that the reactor design was structurally unstable at the low power levels being used during the safety test, and also had a deficient emergency shutdown mechanism (the power plays in the Soviet bureaucracy that affected how this accident was presented to the world form the backdrop to the critically acclaimed 2019 miniseries Chernobyl). The IAEA later released an updated report, called INSAG 7 [INSAG 1992], which provided a more complete picture of the factors that led to the disaster. The updated report also pointed out that the deficit in safety culture affected not only the operations in Soviet nuclear power plants, but also the design, engineering and regulation of these facilities.


The analysis of INSAG built upon earlier work on the organizational dimensions of safety, such as the work of Barry Turner [Turner 1978]. (We will use the Ukrainian spelling Chornobyl, rather than the Russian spelling Chernobyl.)

There has been some discussion of the idea that attributing the Chornobyl accident to cultural features specific to Soviet countries was a way of positing a difference with the nuclear industry as run in Western countries, and a way of reassuring citizens that “it couldn’t happen here”. [Pidgeon 1998] points out that the hypothesis of “poor safety culture” constituting a significant contributing factor “stemmed much more from a rhetorical attempt to reassure Western publics that Chornobyl could not happen here, than from any direct or systematic social science analysis of the deep and complex issues involved in this question”. He also notes ironically that “some 6 years previously, Soviet nuclear engineers had concluded from their analysis of the Three Mile Island incident that such an event could not happen in the Soviet Union because the culture there emphasized safety over production and short-term return on capital”.

It has also been argued [Theureau 2011] that it was a way for the IAEA to avoid interfering (or being perceived to interfere) in Soviet politics, which were in an unstable state at the time.

Other accidents in which safety culture issues have been put forward as contributing factors include Piper Alpha, BP Texas City, Usumacinta, Montara, Columbia (the Columbia Accident Investigation Board, CAIB, wrote about a “broken safety culture” at NASA), Sikorsky S-92A, and Deepwater Horizon.

The report of the public inquiry into the Piper Alpha accident, headed by Lord Cullen, states that “It is essential to create a corporate atmosphere or culture in which safety is understood to be and accepted as, the number one priority”.

Conclusion

From a research point of view, the influence of features of the organizational culture on safety performance is clearly an important topic. Unfortunately, much research has attempted to analyze a separate and hypothesized set of characteristics called the safety culture, without attempting to understand existing research on organizational culture. There has been a profusion of analysis based on poorly defined concepts and weak theoretical underpinnings.

From the practitioner viewpoint, the situation is worse. The widespread adoption of this concept by management consultants as an easy way to make money selling questionnaire services has not helped to promote clarity, and has sometimes given managers a misleading impression that they understood the “state” of their organizational culture. It has also been misinterpreted by some managers as providing evidence for blaming individual workers and instituting behavioural safety programmes. In fairness, some experts and consultants use the interpretive flexibility of the term safety culture as a “foot in the door” in initial discussions with managers, then go on to provide useful advice on managing the organizational dimensions of safety, but anecdotal evidence available to the author suggests that this is unfortunately a minority position.

References

Antonsen, Stian. 2009. Safety culture assessment: A mission impossible? Journal of Contingencies and Crisis Management 17(4):242–254.

Baker, James A., Nancy Leveson, F. L. Bowman, S. Priest, G. Erwin, I. Rosenthal, S. Gorton, et al. 2007. The report of the B.P. US refineries independent safety review panel. Baker Panel. https://www.documentcloud.org/documents/70604-baker-panel-report.

Clarke, Lee. 1993. Drs Pangloss and Strangelove meet organizational theory: High reliability organizations and nuclear weapons accidents. Sociological Forum 8(4):675–689.

Cox, Sue J., and Rhona Flin. 1998. Safety culture: Philosopher’s stone or man of straw? Work and Stress 12(3):189–201.

Eeckelaert, Lieven, Annick Starren, Arjella van Scheppingen, David Fox, and Carsten Brück. 2011. Occupational safety and health culture assessment - a review of main approaches and selected tools. Working environment information working paper. EU-OSHA. https://osha.europa.eu/en/publications/occupational-safety-and-health-culture-assessment-review-main-approaches-and-selected/.

Gherardi, Silvia, Davide Nicolini, and Francesca Odella. 1998. What do you mean by safety? Conflicting perspectives on accident causation and safety management in a construction firm. Journal of Contingencies and Crisis Management 6(4).

Grote, Gudela. 2012. Safety management in different high-risk domains – all the same? Safety Science 50(10):1983–1992.

Guldenmund, Frank W. 2000. The nature of safety culture: A review of theory and research. Safety Science 34(1-3):215–257.

Guldenmund, Frank W. 2007. The use of questionnaires in safety culture research – an evaluation. Safety Science 45(6):723–743.

Guldenmund, Frank W. 2010. (Mis)understanding safety culture and its relationship to safety management. Risk Analysis 30(10):1466–1480.

Hale, Andrew R. 2000. Culture’s confusions. Safety Science 34(1-3):1–14.

Henriqson, Éder, Betina Schuler, Roel van Winsen, and Sidney W. A. Dekker. 2014. The constitution and effects of safety culture as an object in the discourse of accident prevention: A Foucauldian approach. Safety Science 70:465–476.

Hofstede, Geert. 2001. Culture’s consequences: Comparing values, behaviors, institutions, and organizations across nations. 2nd ed. Sage.

Hopkins, Andrew. 2005. Safety, culture and risk: The organisational causes of disasters. Australia. CCH.

Hudson, Patrick. 2007. Implementing a safety culture in a major multi-national. Safety Science 45(6):697–722.

INSAG. 1986. Summary report on the post-accident review meeting on the Chernobyl accident, INSAG 1. STI/PUB/740. Vienna. International Nuclear Safety Advisory Group, IAEA. https://www.iaea.org/publications/3598/summary-report-on-the-post-accident-review-meeting-on-the-chernobyl-accident.

INSAG. 1992. The Chernobyl accident: Updating of INSAG-1, INSAG-7. STI/PUB/913. Vienna. International Nuclear Safety Advisory Group, IAEA. https://www.iaea.org/publications/3786/the-chernobyl-accident-updating-of-insag-1.

Janis, Irving L. 1972. Victims of groupthink: A psychological study of foreign-policy decisions and fiascoes. Houghton Mifflin.

Martin, J. 1985. Can organizational culture be managed? In Organizational Culture, edited by Peter J. Frost. Beverly Hills. Sage Publications.

Mearns, Kathryn J., Rhona Flin, Rachael Gordon, and Mark Fleming. 1998. Measuring safety climate on offshore installations. Work and Stress 12(3):238–254.

Mearns, Kathryn, and Steven Yule. 2009. The role of national culture in determining safety performance: Challenges for the global oil and gas industry. Safety Science 47(6):777–785.

Moghaddam, Fathali M., and David S. Crystal. 1997. Revolutions, samurai, and reductions: The paradoxes of change and continuity in Iran and Japan. Political Psychology 18(2):355–384.

O’Reilly, Charles A., and Jennifer A. Chatman. 1996. Culture as social control: Corporations, cults, and commitment. Research in Organizational Behaviour 18:157–200.

Perrow, Charles. 1984. Normal accidents: Living with high-risk technologies. New York. Basic Books.

Perrow, Charles. 1999. Normal accidents: Living with high-risk technologies. Princeton. Princeton University Press.

Pidgeon, Nick F. 1998. Safety culture: Key theoretical issues. Work and Stress 12(3):202–216.

Pidgeon, Nick F., and Michael O’Leary. 2000. Man-made disasters: Why technology and organizations (sometimes) fail. Safety Science 34(1-3):15–30.

Putnam, Robert D. 1993. Making democracy work: Civic traditions in modern Italy. Princeton University Press.

Reason, James. 1990. Human error. Cambridge University Press.

Reiman, Teemu, and Carl Rollenhagen. 2014. Does the concept of safety culture help or hinder systems thinking in safety? Accident Analysis and Prevention 68:5–15.

Reynolds, P. C. 1994. Corporate culture on the rocks. In Anthropological Perspectives on Organizational Culture, edited by H. A. Sibley. University Press of America.

Rollenhagen, Carl. 2010. Can focus on safety culture become an excuse for not rethinking design of technology? Safety Science 48(2):268–278.

Schein, Edgar H. 1985. Organisational culture and leadership. Jossey-Bass Publishers.

Schwartz, Shalom, Anat Bardi, and Gabriel Bianchi. 2000. Value adaptation to the imposition and collapse of communist regimes in Eastern Europe. In Political Psychology: Cultural and Cross Cultural Foundations, edited by John Duckitt and Stanley A. Renshon, 217–237. Macmillan.

Silbey, Susan S. 2009. Taming Prometheus: Talk about safety and culture. Annual Review of Sociology 35(1):341–369.

Sull, Donald, Stefano Turconi, and Charles Sull. 2020. When it comes to culture, does your company walk the talk? MIT Sloan Management Review. https://sloanreview.mit.edu/article/when-it-comes-to-culture-does-your-company-walk-the-talk/.

Theureau, Jacques. 2011. La relation entre culture et sûreté dans une éventuelle ingénierie des situations sûres. http://www.coursdaction.fr/08-nonpublies/2011-JT-T24.pdf.

Turner, Barry A. 1978. Man-made disasters. London. Wykeham Publications.

Turner, Barry A. 1992. The sociology of safety. In Engineering Safety, edited by David I. Blockley, 165–178. London. McGraw-Hill.

Westrum, Ron. 2004. A typology of organisational cultures. BMJ Quality & Safety 13(suppl 2):ii22–ii27.

Zhang, Hui, Douglas A. Wiegmann, Terry L. von Thaden, Gunjan Sharma, and Alyssa A. Mitchell. 2002. Safety culture: A concept in chaos? In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 46(15):1404–1408.

Zohar, Dov M. 1980. Safety climate in industrial organizations: Theoretical and applied implications. Journal of Applied Psychology 65(1):96–102.

Zohar, Dov M., and David A. Hofmann. 2012. Organizational culture and climate. In The Oxford Handbook of Organizational Psychology, Vol. 1, edited by Steve W. J. Kozlowski, 643–666. Oxford University Press.
